The Avocado Pit (TL;DR)

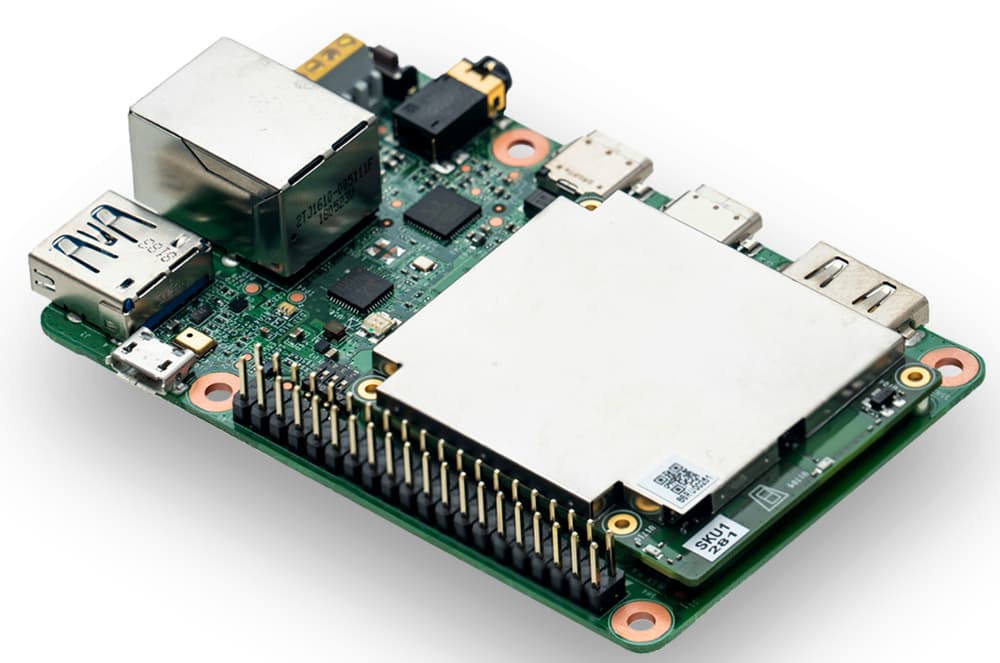

- 🎉 Google’s TPU (Tensor Processing Unit) is here to make AI faster and cooler.

- 🤖 Built for AI workloads, it can outpace general-purpose GPUs on many machine learning tasks. Time to up your AI game!

- 💰 Could change how AI hardware is bought and sold. Wallets, beware.

Why It Matters

Let's face it, GPUs have been the Beyoncé of AI hardware for a while now: always in the spotlight, always in demand. But Google's TPU is stepping up like a challenger ready to share the stage. It's designed specifically for AI workloads, which means it can run many of them faster and more efficiently than general-purpose GPUs. Think of it as the caffeine shot your AI has been craving.

What This Means for You

If you're dabbling in AI, this is your chance to get hands-on with cutting-edge hardware that could supercharge your projects. TPUs are optimized for machine learning, so they chew through the heavy math of training and running models like a pro. For businesses, this means potentially lower costs and higher efficiency. For hobbyists, it could mean getting cool AI models to run without having to sell a kidney for a top-tier GPU.

Fresh Take

Google's TPU isn't just another player in the game; it could be a game-changer. With more companies investing in AI, a dedicated chip like the TPU could set a new standard for how AI applications are developed and deployed. GPUs will still have their place, but TPUs might just be the new darling of the AI hardware world. So keep an eye on this space; it's about to get interesting.

Read the full article at Barron's.